There is one kind of people I have no respect for: those who think they are always right. Doesn't matter why, be it because God is on their side, or because they are the good guys, or because they are the smartest, bravest, or strongest, or even because science agrees with them.

Nobody is ever perfect. Everyone can get better by recognizing mistakes and trying not to repeat them. But the moment you stop questioning yourself, you enter a death spiral of bad decisions and more bad decisions to cover them up.

You can fool yourself, you can fool people around you, for a while. But you can't fool reality. Reality doesn't care that it would hurt your pride by not conforming to your ambitions. You might manage to die before the consequences catch up to you, but they will catch up to those you leave behind. Which isn't fair, but reality doesn't care about fairness either.

Modded Minecraft be like

I don’t want AI to make me 10x more productive, I want it to give me Fridays off.

So often I run into a software footgun that has been created because it was the easiest way, and technically fits the requirements. And somehow authors often overlook the fact that it won't really serve the users as well, because they are not born with the intrinsic knowledge of where the footgun is and how to avoid it.

"But, if they hold it right, it will work!.."

something

Gary from IT is here to get rid of the bugs in your computer

Did you know? By replacing your coffee with green tea, you can lose up to 92% of what little joy you still have left in your life.

Here's a thing... If a person is known to be a shameless liar, no matter how similar their political views are to my own, there is no reason to believe they would stick to them when it's no longer convenient.

By the same token, if a person is a ruthless asshole, I'd rather not give them free rein to carry out something I am sympathetic to, because next time I may be the target of their ruthlessness.

It's sad really, we desperately need the best people to lead us, but politics is so antithetical to ethics that truly wonderful people prefer to do other things.

PSA: If your Chrome browser updated and declared uBlock Origin "no longer supported", that's a bit of a misdirection. You can go to the "Manage Extensions" page, select uBlock Origin and click "Keep for now". And it will work. For now.

Thinking more on web #decluttering, I now believe that this is largely a matter of control.

My programming career started in web development and even though I was never good at the design part, it was always immensely satisfying to get my web site to look just right. Perfect margins, best color scheme, nicest fonts, but all of it according to me.

Herein lies the problem: web sites are largely created to represent the tastes (or corporate style) of the creators, and not the preferences of visitors. I think that the key to a user-friendly web is giving control back to the users.

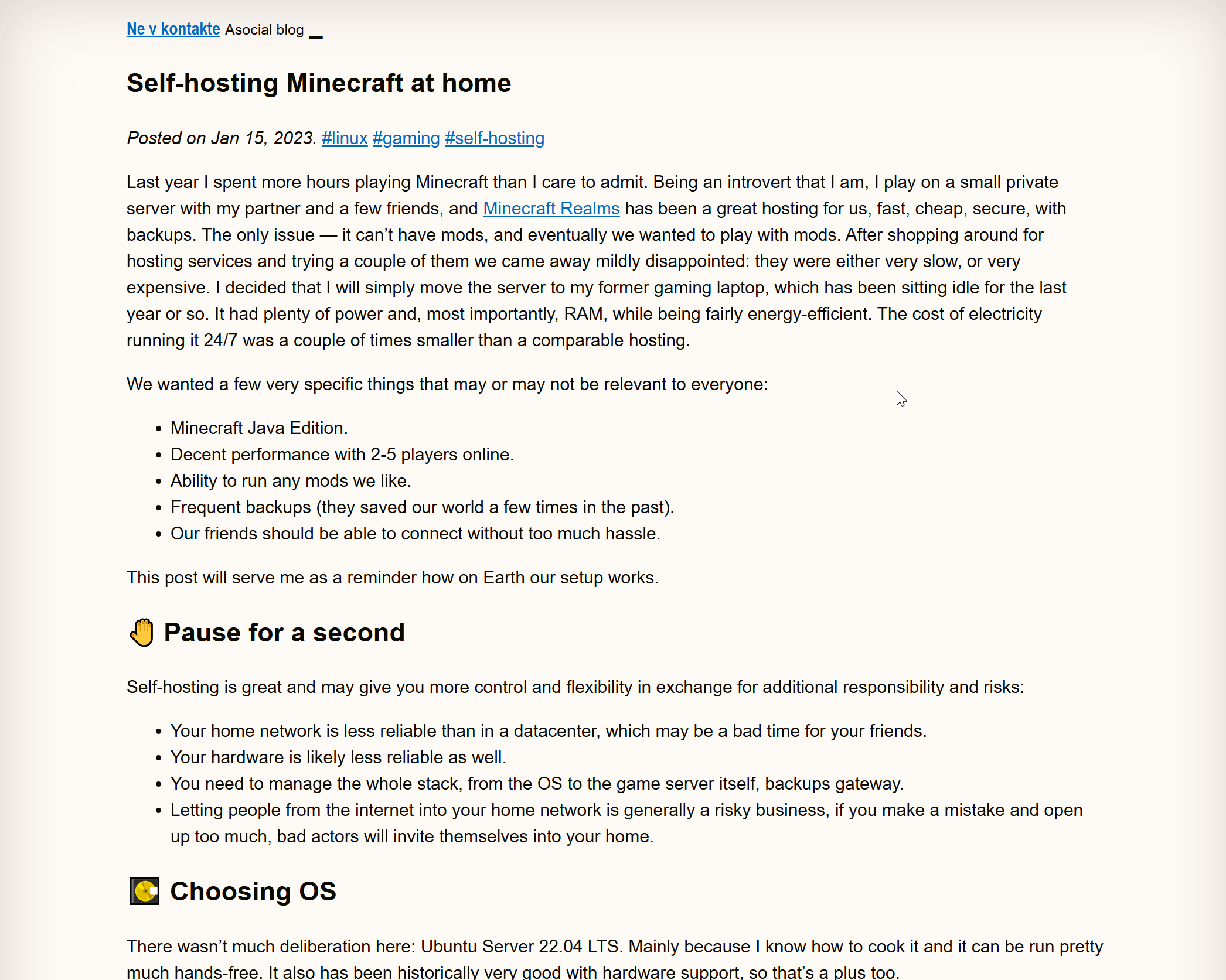

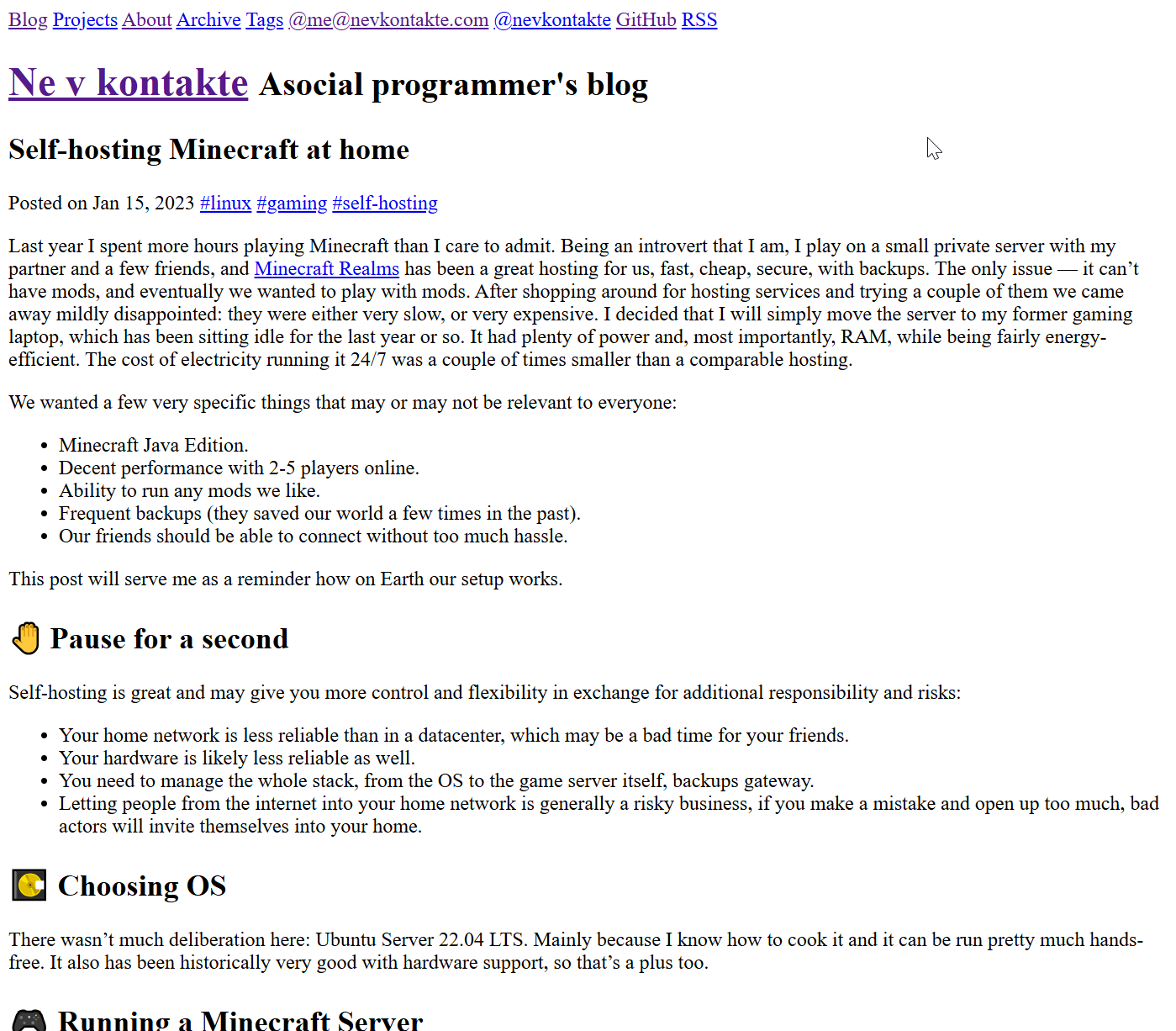

The hard part is how much control we should give back. In the attachment are three versions of the same blog post: one with my current blog's theme (the green one, designed according to my tastes), one with all styles stripped off (literally zero custom CSS), and one with very minimal styling (mostly browser defaults, plus a few tweaks to improve readability).

Which one is better for the user? I dunno.

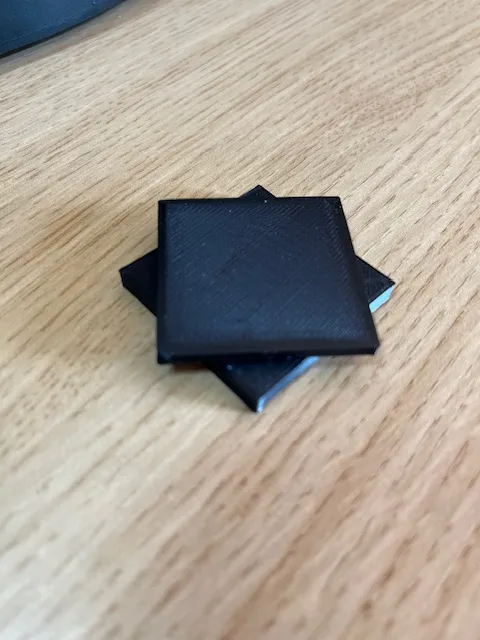

I’m certainly not the first to come up with it, but this seems like an interesting joint design for 3d prints that need some measure of adjustability at assembly time and stiffness the rest of the time.

Just off to check if my keyboard manufacturer requires a (non-exclusive!) licence from me for everything I type on it.

@matthew_d_green It also makes one wonder how other companies that collect and store customer data in "the cloud" have responded to the identical requests from the UK government that we can assume they've all received. The fact that we're only hearing about Apple's response is, I think, telling.

https://issues.chromium.org/issues/40090179 is as sad as it is hilarious...

At some point Chrome OS implemented fashionable (but utterly unusable) overlay scroll bars, and it took 7 years of users complaining for the team to implement a setting to get the normal scroll bars back. Which was harder for them than you'd think for various technical reasons. And non-technical.

But here's the kicker: for the setting to do anything, you gotta go into chrome://flags#overlay-scrollbars and flip that flag first

What I've learned from observing politics and tech is that not having any ethics is good for business

I guess I'm not becoming rich any time soon

master: welcome to my Smart Home

student: wow. how is the light controlled?

master: with this on-off switch

student: i don't see a motor to close the blinds

master: there is none

student: where is the server located?

master: it is not needed

student: excuse me but what is "Smart" about all of this?

master: everything.

in this moment, the student was enlightened

Software development is not art. Nor is it a craft.

It is experimental science, at least when done well. It's the "applied" discipline to computer science.

Hypotheses and experiments are everywhere in the field. A unit test is an experiment to verify that a piece of code does what we think it does. A good unit test is falsifiable: it is designed to fail if the underlying hypothesis is false (which is why TDD preaches writing tests first).
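
A minimal sketch of what "falsifiable" could look like in practice. The `slugify` function and both tests are hypothetical, made up purely for illustration; the first test states a hypothesis that can be proven wrong, the second one passes no matter what string comes back.

```python
import unittest


def slugify(title: str) -> str:
    """Hypothetical function under test: turn a post title into a URL slug."""
    return "-".join(title.lower().split())


class SlugifyTest(unittest.TestCase):
    def test_whitespace_collapses_to_single_hyphens(self):
        # Hypothesis: any run of whitespace becomes exactly one hyphen.
        # This experiment fails if slugify() keeps spaces or doubles hyphens.
        self.assertEqual(slugify("Hello   Big World"), "hello-big-world")

    def test_returns_something(self):
        # Not much of an experiment: any string at all passes,
        # so this test cannot refute the hypothesis above.
        self.assertIsNotNone(slugify("Hello   Big World"))
```

Saved to a file, this can be run with `python -m unittest <file>`. Writing the first kind of test before the code exists guarantees you see it fail at least once.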

Production canaries are an experiment that verifies the hypothesis that the program's expectations of its environment match the real environment. The best monitoring and alerting I've ever seen was written in the same style: to detect whether an assumption holds true.
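
A rough sketch of monitoring written as "does this assumption still hold?". Everything here is an illustrative assumption rather than a real monitoring setup: the helper names, the two probes and the 1 MiB threshold are all made up.

```python
import shutil
import tempfile
from typing import Callable


def dir_is_writable(path: str) -> bool:
    """Probe: can we actually create a file in this directory?"""
    try:
        with tempfile.TemporaryFile(dir=path):
            return True
    except OSError:
        return False


def disk_has_room(path: str, min_free_bytes: int) -> bool:
    """Probe: is there at least min_free_bytes of free space under path?"""
    return shutil.disk_usage(path).free >= min_free_bytes


def violated_assumptions(checks: dict[str, Callable[[], bool]]) -> list[str]:
    """Run every probe and return the names of the assumptions that failed."""
    return [name for name, probe in checks.items() if not probe()]


tmp = tempfile.gettempdir()
checks = {
    "temp dir is writable": lambda: dir_is_writable(tmp),
    "temp dir has 1 MiB free": lambda: disk_has_room(tmp, 1 << 20),
}
for name in violated_assumptions(checks):
    print("ALERT: assumption no longer holds:", name)
```

Each alert names the assumption that broke, which tends to be more useful than a raw metric crossing a threshold.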

It strikes me that a lot of hype bubbles in tech are akin to pseudoscience: there is a kernel of truth to them, but they disregard any sign that opposes their desired conclusion.

Lately I've been contemplating enshittification of the web and how overbearing all the styles and scripts can be. We spend so much time, traffic and watts trying to coax browsers into making things accessible and nice to read.

As an exercise, I saved one of the pages from my blog and purged all CSS, JS, Twitter Bootstrap, fonts and unnecessary markup from it.

And you know what? The result is pretty darn readable and nice to look at.
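
For anyone who wants to repeat the exercise, here is a minimal sketch using only Python's standard `html.parser`. It is an assumption about how such a purge might be done, not the script actually used for the blog: it drops `<script>` and `<style>` elements, stylesheet `<link>` tags, HTML comments, and `class`/`style` attributes, and does nothing about fonts or unnecessary markup.

```python
from html import escape
from html.parser import HTMLParser

DROP_WITH_CONTENT = {"script", "style"}  # removed together with their contents
DROP_ATTRS = {"class", "style"}          # presentational attributes to strip


def _is_stylesheet_link(tag, attrs):
    rel = dict(attrs).get("rel") or ""
    return tag == "link" and "stylesheet" in rel.lower().split()


class Declutter(HTMLParser):
    def __init__(self):
        # Keep entities as written instead of decoding them.
        super().__init__(convert_charrefs=False)
        self.out = []
        self.skip_depth = 0  # > 0 while inside <script> or <style>

    def _render(self, tag, attrs, closing=""):
        kept = "".join(
            f" {k}" if v is None else f' {k}="{escape(v, quote=True)}"'
            for k, v in attrs
            if k not in DROP_ATTRS
        )
        return f"<{tag}{kept}{closing}>"

    def handle_starttag(self, tag, attrs):
        if tag in DROP_WITH_CONTENT:
            self.skip_depth += 1
        elif self.skip_depth == 0 and not _is_stylesheet_link(tag, attrs):
            self.out.append(self._render(tag, attrs))

    def handle_startendtag(self, tag, attrs):
        if (self.skip_depth == 0 and tag not in DROP_WITH_CONTENT
                and not _is_stylesheet_link(tag, attrs)):
            self.out.append(self._render(tag, attrs, " /"))

    def handle_endtag(self, tag):
        if tag in DROP_WITH_CONTENT:
            self.skip_depth = max(0, self.skip_depth - 1)
        elif self.skip_depth == 0:
            self.out.append(f"</{tag}>")

    def handle_data(self, data):
        if self.skip_depth == 0:
            self.out.append(data)

    def handle_entityref(self, name):
        if self.skip_depth == 0:
            self.out.append(f"&{name};")

    def handle_charref(self, name):
        if self.skip_depth == 0:
            self.out.append(f"&#{name};")

    def handle_decl(self, decl):
        self.out.append(f"<!{decl}>")  # keep <!DOCTYPE html>


def declutter(html_text: str) -> str:
    parser = Declutter()
    parser.feed(html_text)
    parser.close()
    return "".join(parser.out)
```

Calling `declutter(open("post.html").read())` (the file name is a placeholder) returns the page with browser-default styling only.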

A part of my blog publishing script is a broken link checker. It's a bit sad to see how year to year I have to update more and more of them to point at Web Archive... Honestly, it and Wikipedia are probably the sites of the highest public value of them all. Please throw a few bucks their way next time you use them.

Printing a tool holder for a 3d printer is a rite of passage of sorts for a 3d printer owner. I’m pretty late to the party, but I’m quite pleased with the results.