Video Game Archive Myrient to Shut Down on March 31

https://hackaday.com/2026/02/27/video-game-archive-myrient-to-shut-down-on-march-31/

I will not verify my identity or age for any online service, other than clicking on an "I am over 18" button, ever. I will simply stop using the service, if it is required.

I encourage you to do the same. It's not "for the children". It's to harvest even more information from you so corporations can try to squeeze even more money out of you and to be able to track you and everything you do.

Do you know what's not accessible? Writing "a11y" in any article or documentation

I will accept it as a convenience in APIs since developers are lazy and can't spell, but fuck off with using it in text

Sometimes I feel like we are already living out Roko's Basilisk's retribution. That would explain a lot of what's going on.

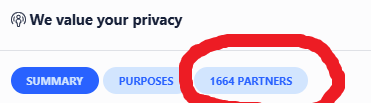

We knew this was coming, but now the clock is running. From Privacy International:

"Yesterday the Trump Administration announced a proposed change in policy for travellers to the U.S. It applies to the powers of data collection by the Customs and Border Police (CBP)."

"If the proposed changes are adopted after the 60-day consultation, then millions of travellers to the U.S. will be forced to use a U.S. government mobile phone app, submit their social media from the last five years and email addresses used in the last ten years, including of family members. They’re also proposing the collection of DNA."

PI linked to and summarized a Federal Register entry describing the proposed requirements:

-All visitors must submit ‘their social media from the last 5 years’

-ESTA (Electronic System for Travel Authorization) applications will include ‘high value data fields’, ‘when feasible’

-‘telephone numbers used in the last five years’

-‘email addresses used in the last ten years’

-‘family number telephone numbers (sic) used in the last five years’

-biometrics – face, fingerprint, DNA, and iris

-business telephone numbers used in the last five years

-business email addresses used in the last ten years.

The Federal Register entry says comments are encouraged and must be submitted (no later than February 9, 2026) to be assured of consideration.

Federal Register entry: https://www.govinfo.gov/content/pkg/FR-2025-12-10/pdf/2025-22461.pdf

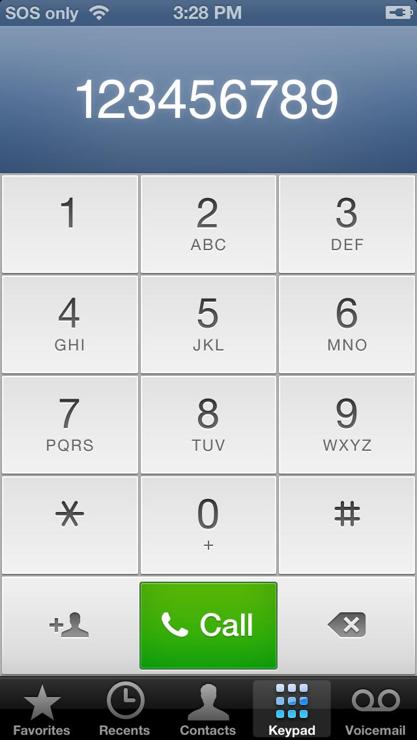

iOS 6 has to be the last iOS with well-designed UI. The UI is intentional and clear. It doesn't try to be anything more than that. It doesn't blur the lines between UI and user content. The UI is actually there as you need it rather than playing peek-a-boo with you. The visual affordances are very much appreciated. You know what is your stuff and what is a control or tool. And it makes *excellent* use of the very small real-estate available to it. It's refreshing to see.

#internet_of_shit


At this point I am % convinced that “smartphone-enabled devices” is just a cost-cutting measure, because throwing together a mobile app is a lot cheaper than designing and manufacturing a usable physical interface of any complexity beyond a power button.

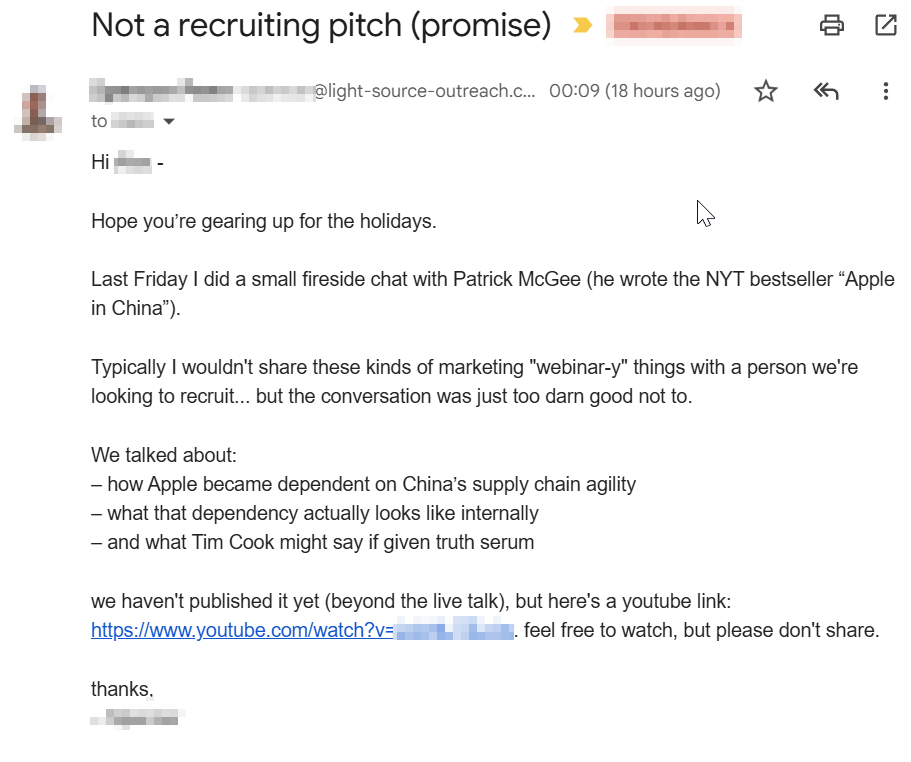

I guess it's that time of the year, when we call out and make fun of really stupid recruiting techniques.

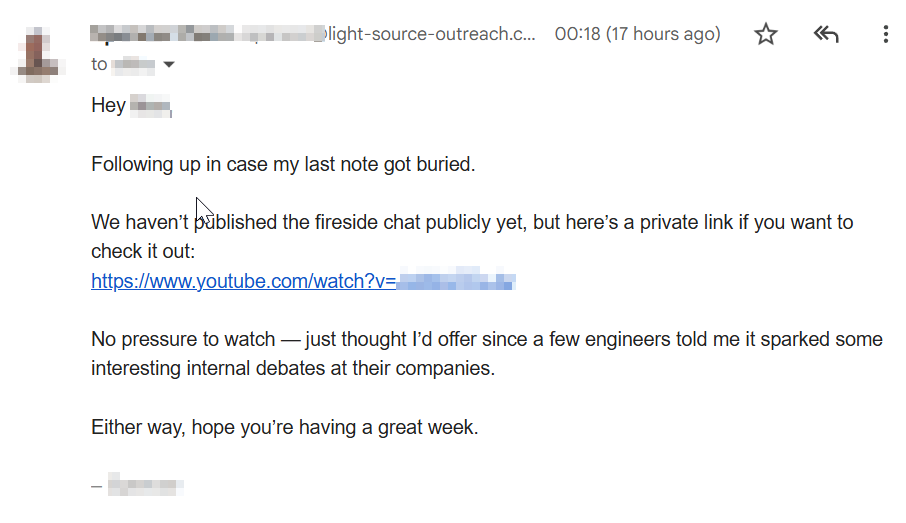

Exhibit 1: An email titled "Not a recruiting pitch (promise)", which contains a link to a webinar the sender recorded with a dude I have no knowledge of, or interest in.

Conclusion 1: gain 1 point for exceeding my expectations and actually not including a recruiting pitch right there and then. Lose 2 points for unsolicited self promotion. Gain 0 response.

Exhibit 2: An email the next day, "Sharing the talk I mentioned", with a link to exactly the same talk, which I am as uninterested in as I was yesterday. Honestly, if we were friends or at least passing acquaintances, this would be forgivable, provided the sender knew what I'm into and really thought this talk would be useful to me in some way. But from a total stranger sharing their own video, this is just rude.

Conclusion 2: Lose 4 points for continued self-promotion, and lose one extra point for not reading the room. Gain 0 response.

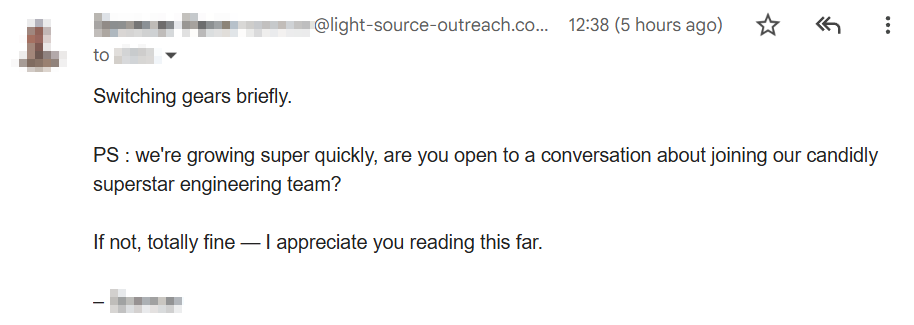

Exhibit 3: An email the day after, the only meaningful text in which is "PS : we're growing super quickly, are you open to a conversation about joining our candidly superstar engineering team?"

Conclusion 3: Aw, fuck, I knew it. Lose 100 points for breaking a promise, and lose 10 more points for damaging my faith in humanity like that. Gain 1 custom-made email filter to have all our future emails sent straight to garbage.

GitHub actions yeah but what about GitHub consequences

Can't wait until ML is boring and uncool again.

One of the rare times I could honestly say that I read and agreed with all terms and conditions presented to me.

Recent discussion about the perils of doors in gamedev reminded me of a bug caused by a door in a game you may have heard of called "Half-Life 2". Are you sitting comfortably? Then I shall begin.

Internet Archive Hits One Trillion Web Pages

https://hackaday.com/2025/11/18/internet-archive-hits-one-trillion-web-pages/

Played with #Claude Code Web a bit, and can confirm that https://m.nevkontakte.com/o/253f446cde96497dabd09a1056100f66 still holds true.

Two main reasons to drink decaf:

- You get to keep your comfort ritual.

- Every now and then you can drink regular coffee and get superpowers for a day.

Espressif hired a bunch of Rust devs years ago and they have been quietly doing great work supporting Rust on their chips ever since. Still haven’t seen any other chip companies get close.

For a long time I was curious whether Claude Code works so well because of Claude (the model) or Code (the CLI tool / agent). This weekend, I tried to find out. Turns out that both matter, but more than anything, post-training fine-tuning of the model makes a big difference. If the model has been tuned for planning and tool usage in a certain way, it provides much more reliable results.

Details as in https://nevkontakte.com/2025/swap-ai-brains.html

Watching all of the random things that people have been saying about AWS's #outage yesterday reminded me of a discussion that I had with one of my teams while working at Google.

We were doing longer-term planning, and people were proposing multi-year goals. One (moderately senior) teammate wanted a goal of roughly "MTTR decreases X% per quarter".

So, that sounds nice in theory but it's not how mature services really work. As you fix the easy bugs, you get fewer and fewer trivial outages. "Admin typed dumb thing" mostly goes away with better checking and deployment policies. "Partial backend failure caused cascading failure" is mostly handled by avoiding patterns that cause cascading failures, and then dealing with partial failures as best as they can be handled. "Trivially bad software release broke things" gets handled by improved testing and canarying over time.

Unfortunately, once you get rid of the easy outages, you're left with *weird* stuff. I/O patterns that trigger latent firmware bugs in SSDs, causing accelerated failure fleet-wide, with a multi-year lead time on replacements. Datacenter fires. Natural disasters. CPU bugs. ROMs that get overwritten by excessive reading. Software bugs that cut across 4 or more services and somehow manage to find decade-old fatal flaws. Overloading some resource *that no one knew existed* (per-second-level-domain HTTP cookie jar size, undocumented stateless router hardware state limits). Or (one of my favorites) BGP stops converging correctly because several racks were too heavy and their plastic wheels had cracked (yes, really!).

The sorts of things that you *can't* fix fast, because no one even has a good model for what is happening, and none of the usual quick fixes (roll back, drain, loadshed, etc) are helpful.

In this specific service's case, we weren't *quite* to the maturity level where I expected MTTR to start rising, but we were getting close. And, frankly, we didn't track MTTR very closely anyway.
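A rough sketch of the arithmetic behind "MTTR starts rising", using made-up numbers (every duration below is hypothetical, not data from any real service). MTTR is just the mean of per-incident recovery times, so preventing the quick-to-fix outages pushes the average up even while total downtime goes down:

```python
from statistics import mean

# Hypothetical recovery times in minutes; illustrative values only.
easy_outages = [5, 10, 15, 10]   # e.g. admin typos, bad releases fixed by rollback
weird_outages = [480, 1440]      # e.g. firmware bugs, datacenter fires


def mttr(recovery_minutes):
    """Mean time to recovery: the average of per-incident recovery times."""
    return mean(recovery_minutes)


# Less mature service: both kinds of outage still happen.
before = easy_outages + weird_outages
# More mature service: the easy outages have been engineered away.
after = weird_outages

for label, incidents in (("before", before), ("after", after)):
    print(
        f"{label}: {len(incidents)} incidents, "
        f"{sum(incidents)} min total downtime, "
        f"MTTR {mttr(incidents):.0f} min"
    )
```

Under these assumed numbers, the "after" service has fewer incidents and less total downtime, yet a higher MTTR, which is why "MTTR decreases X% per quarter" can punish exactly the work that makes a service more reliable.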

Reading takes on AWS's outage like "when we had our own datacenters, we never had long outages without any ETA for recovery" mostly just means that you never had any of the really *fun* problems.

Remember, the reward for a job well-done is a new, harder job.

Nevkontakte


bc100 arc 🔜@eth0
